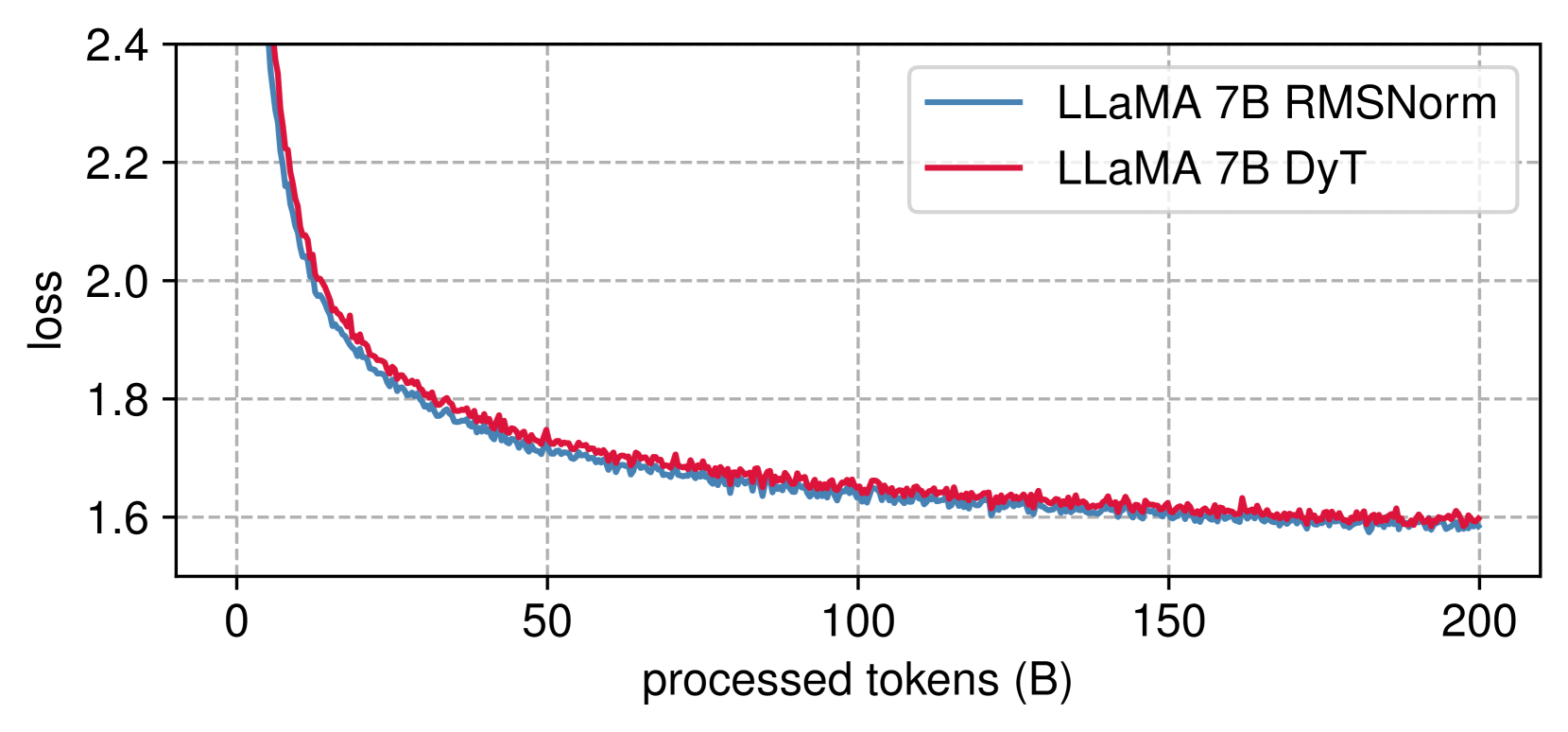

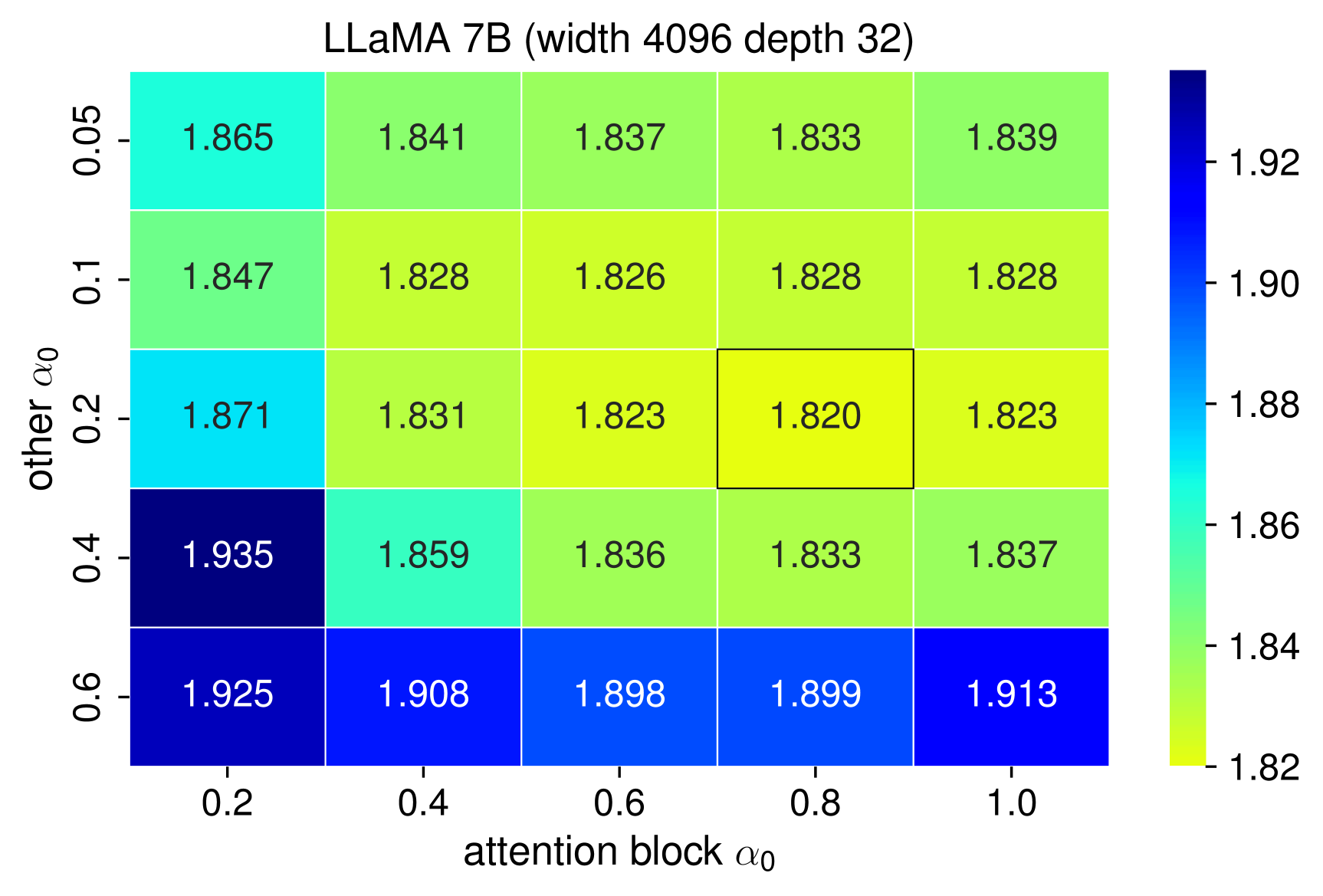

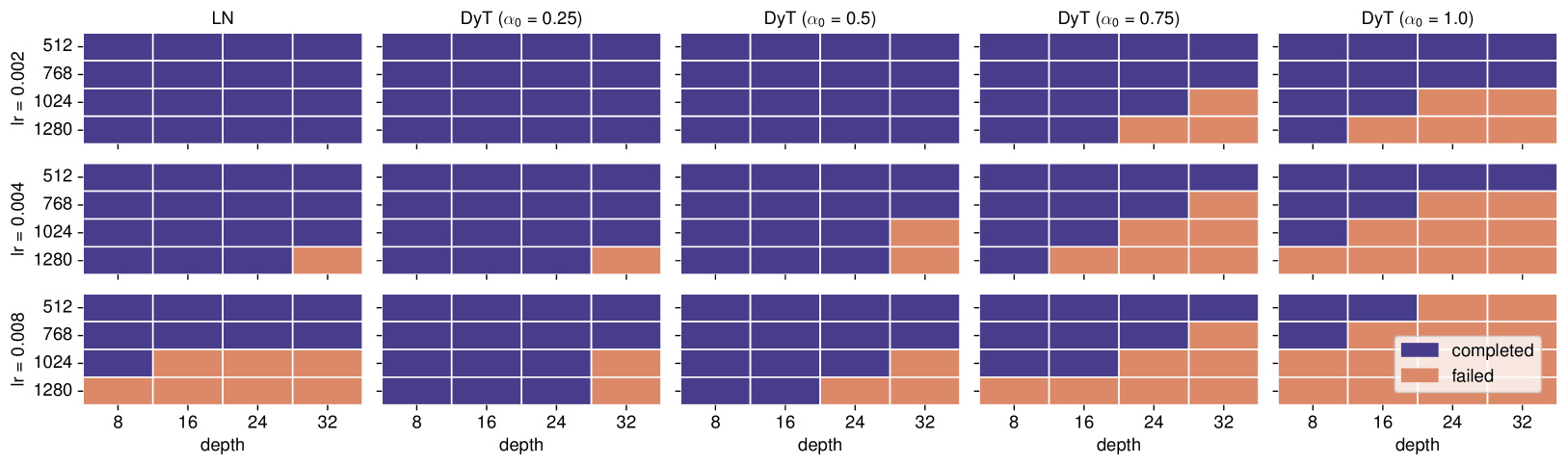

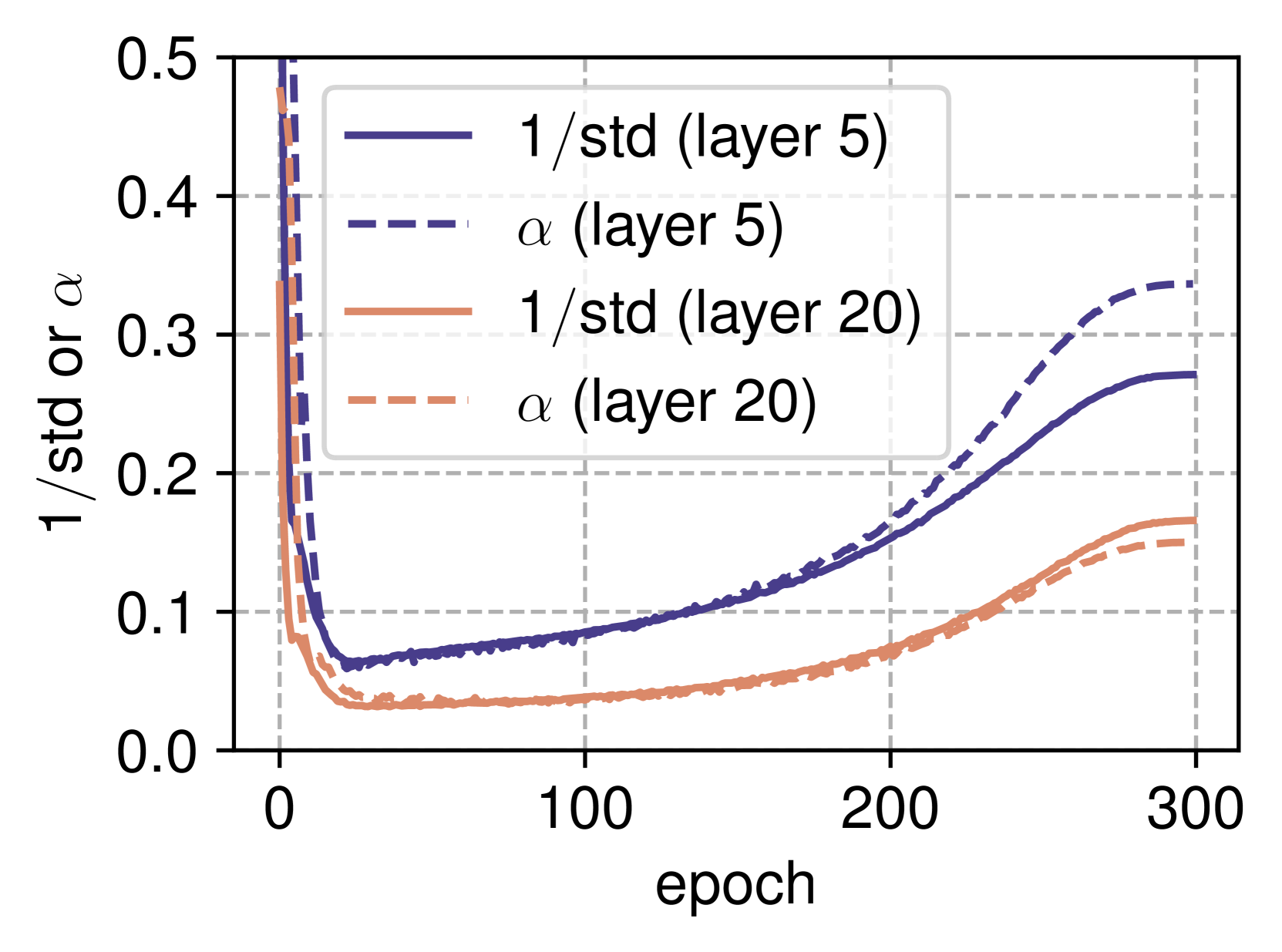

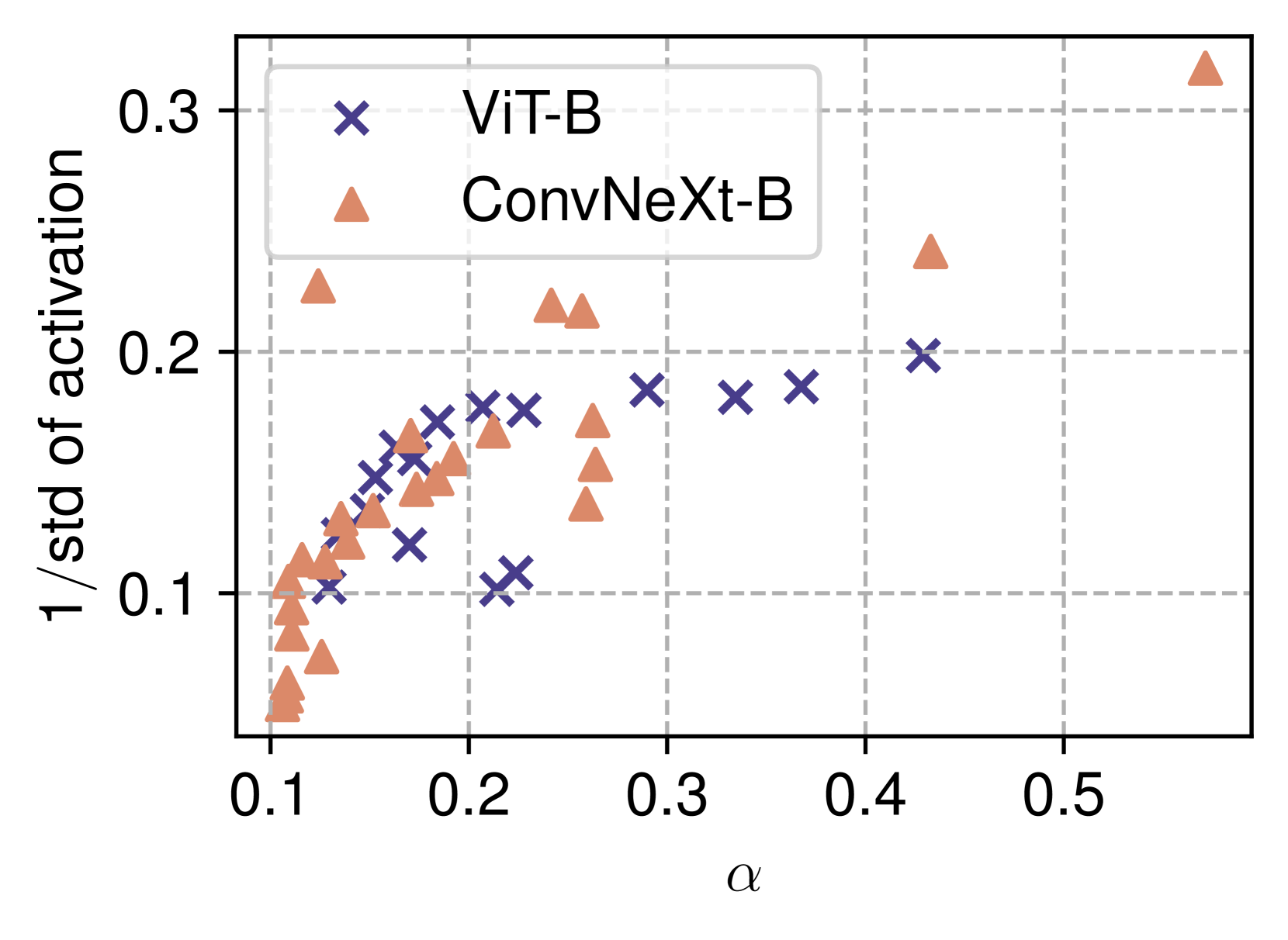

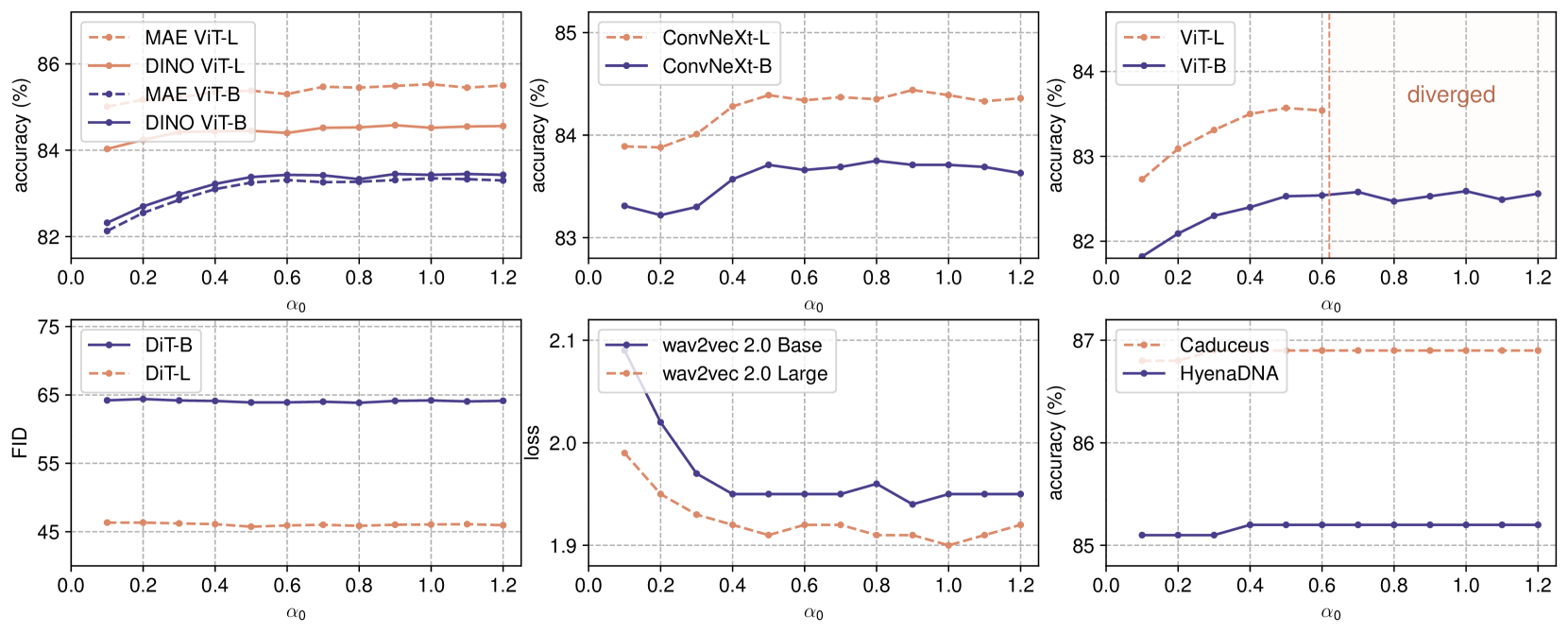

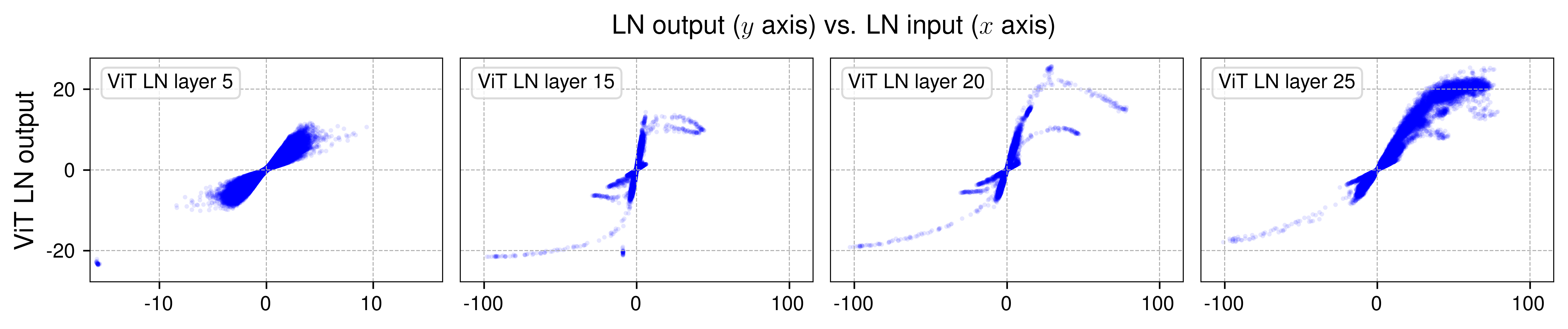

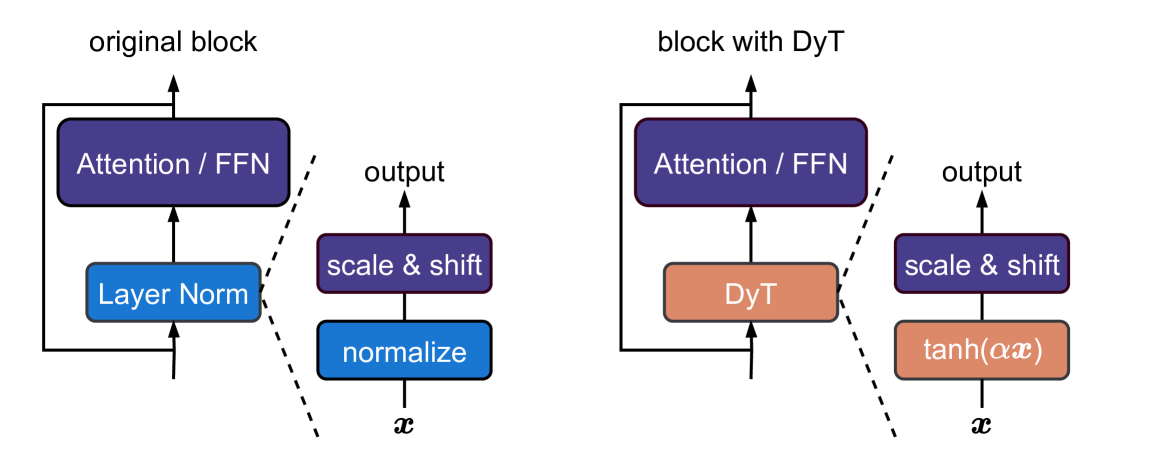

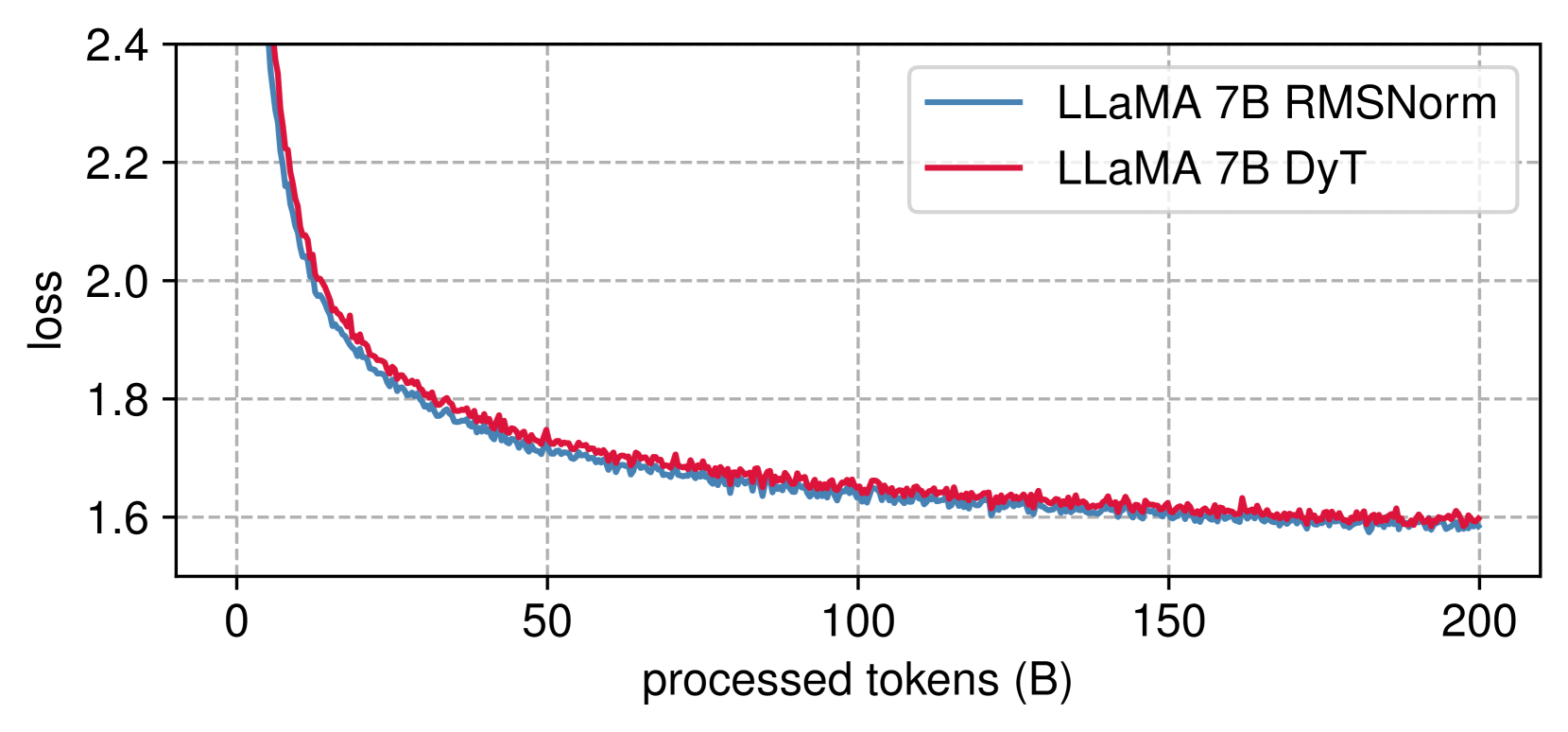

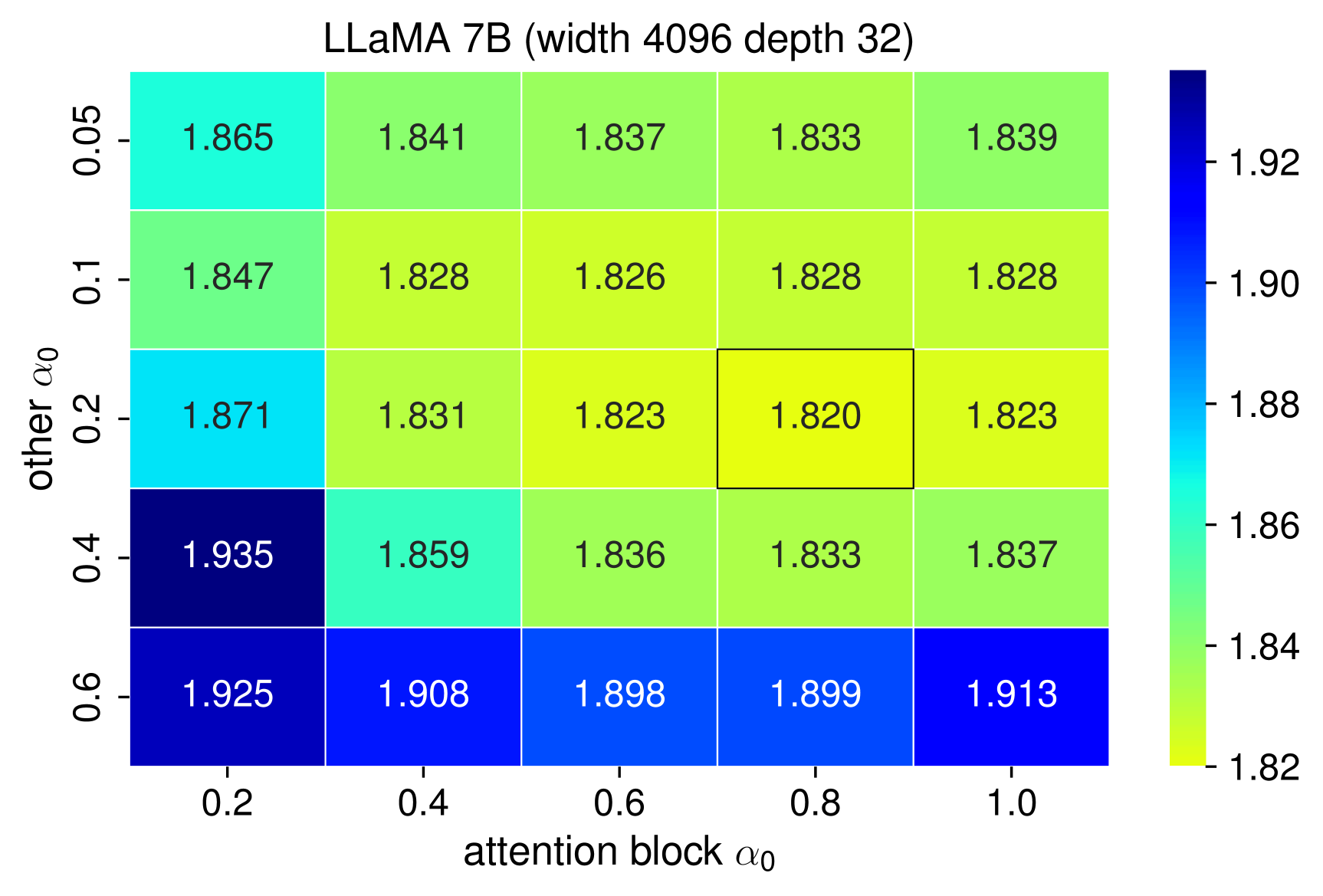

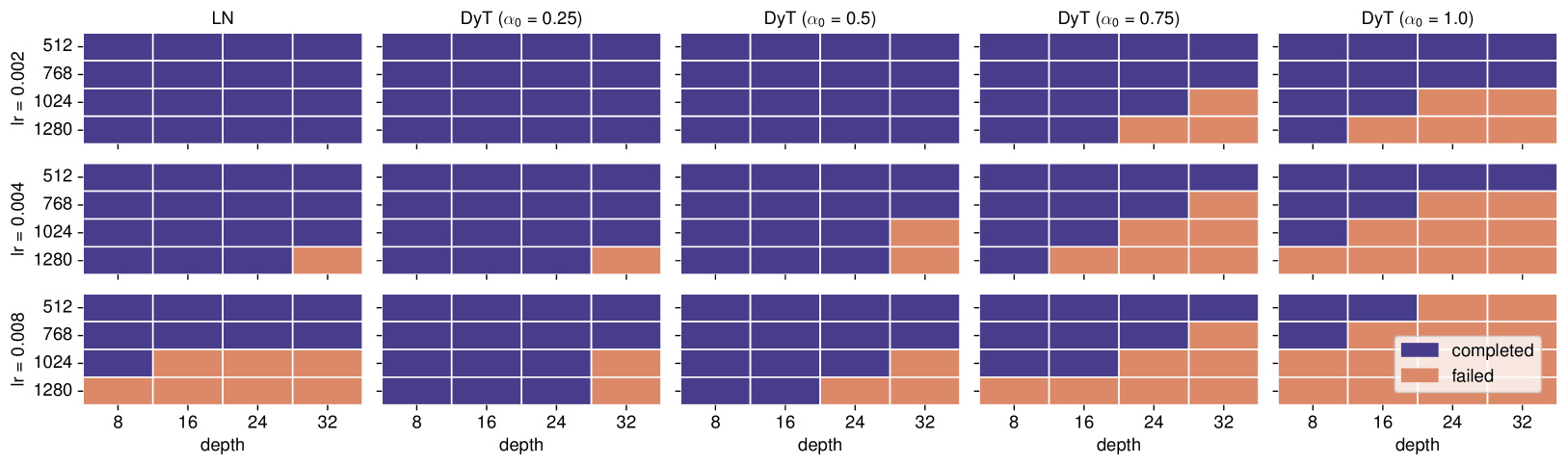

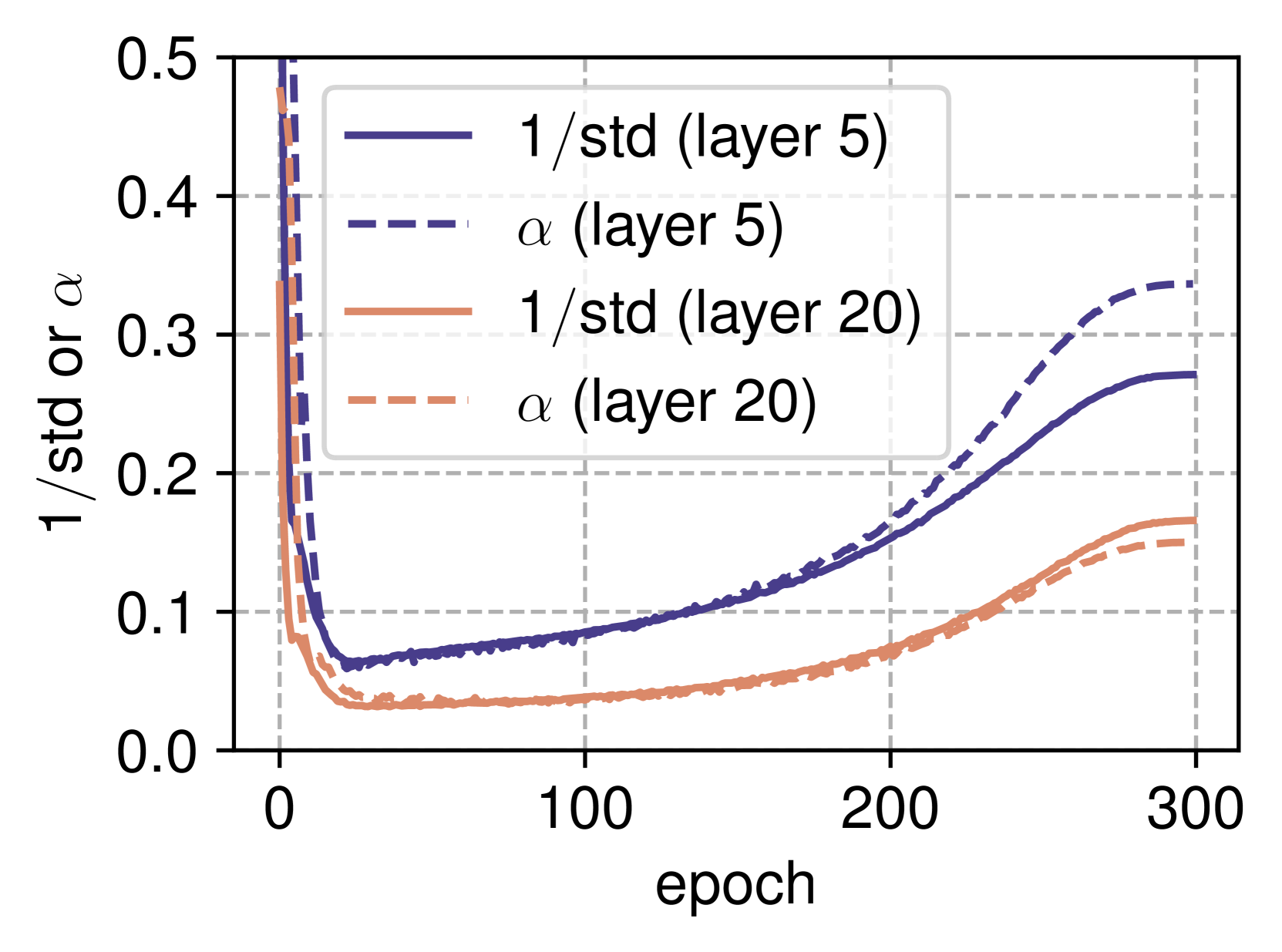

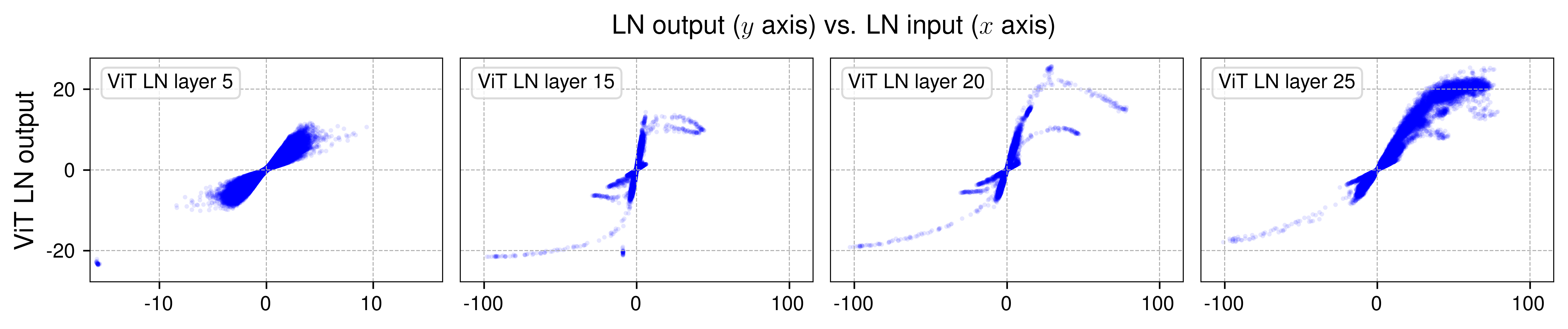

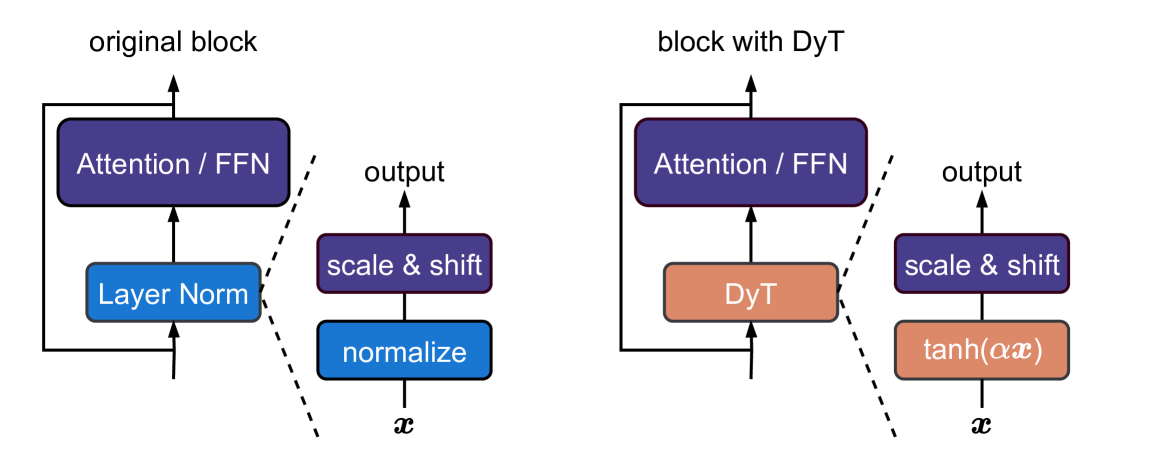

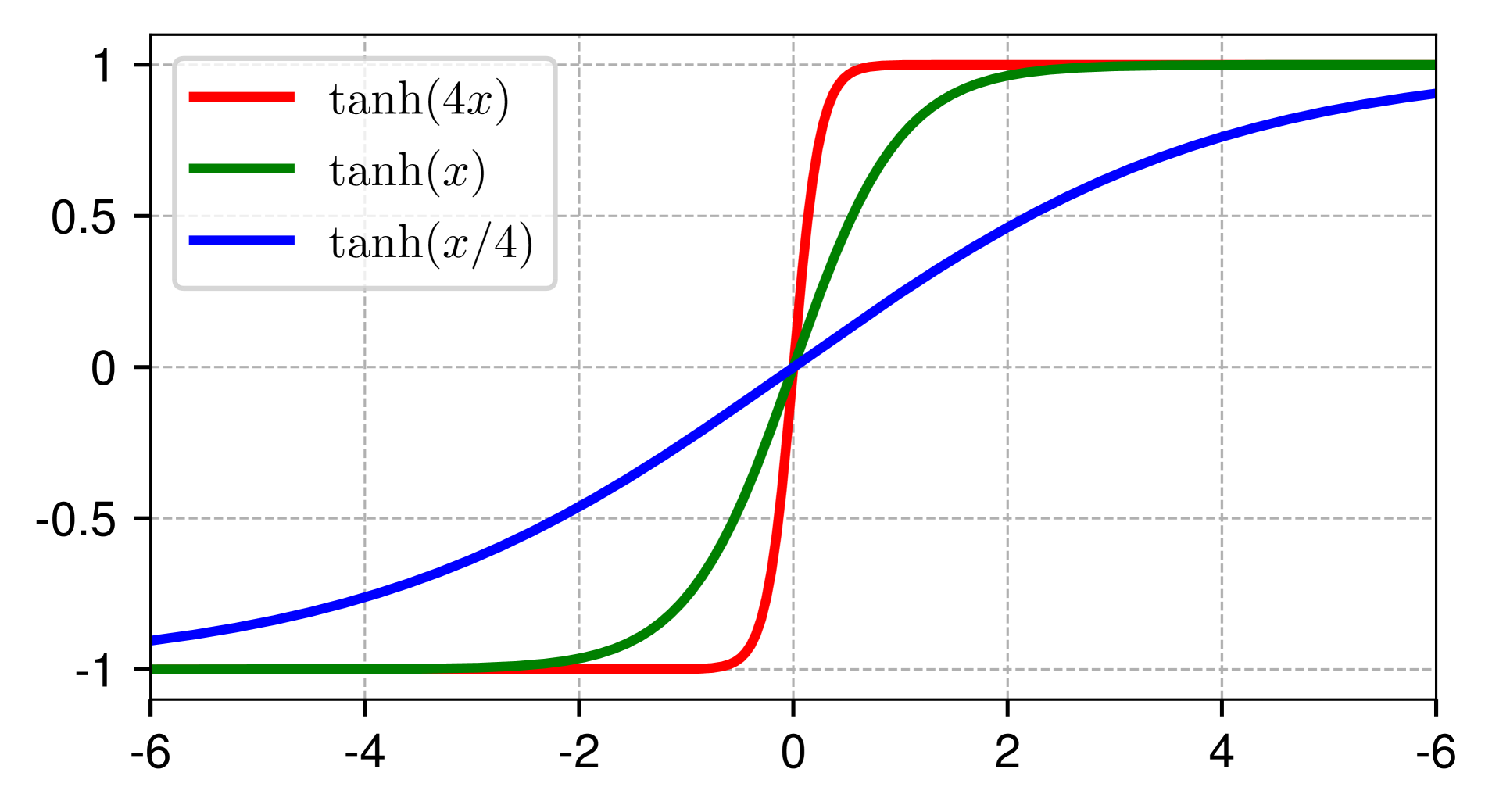

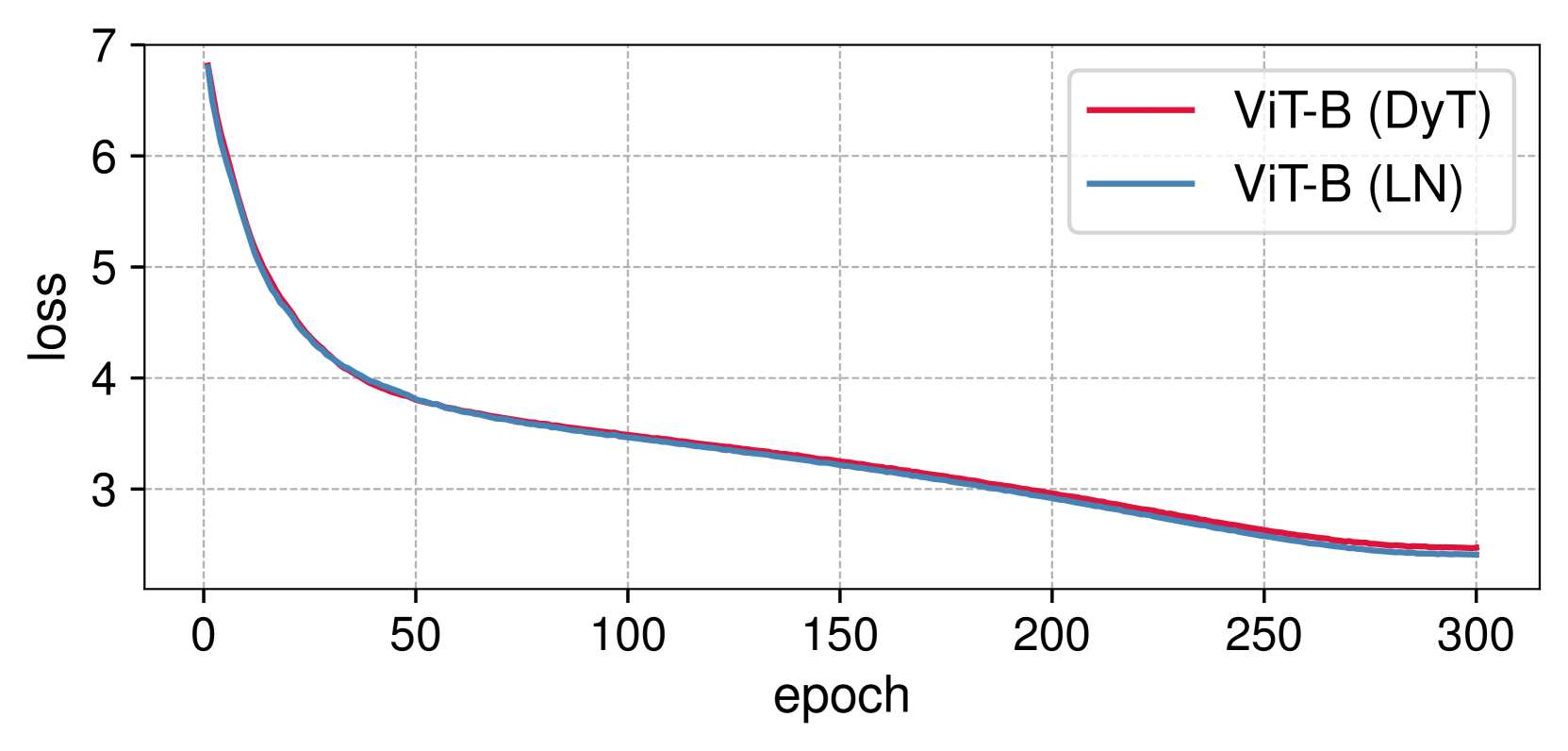

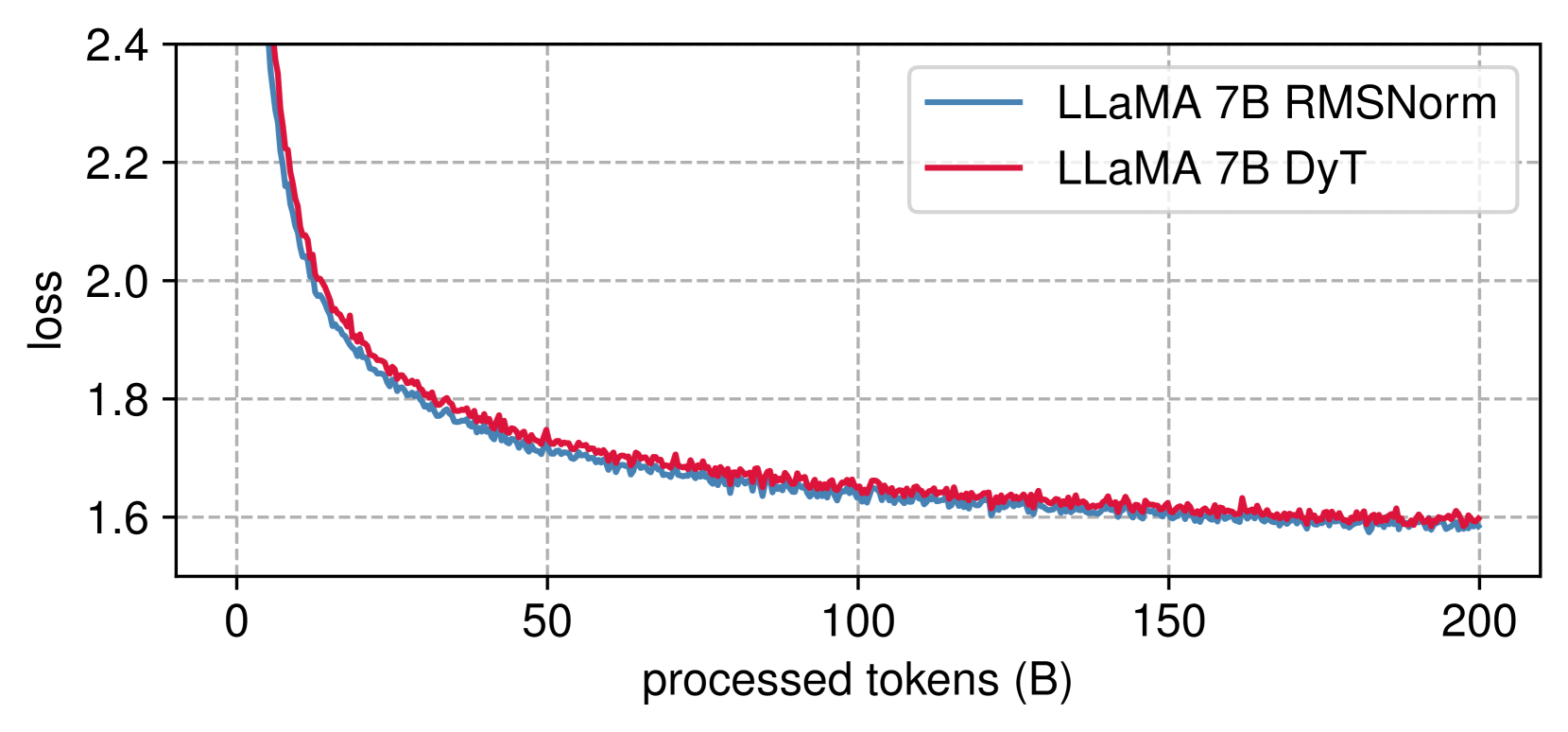

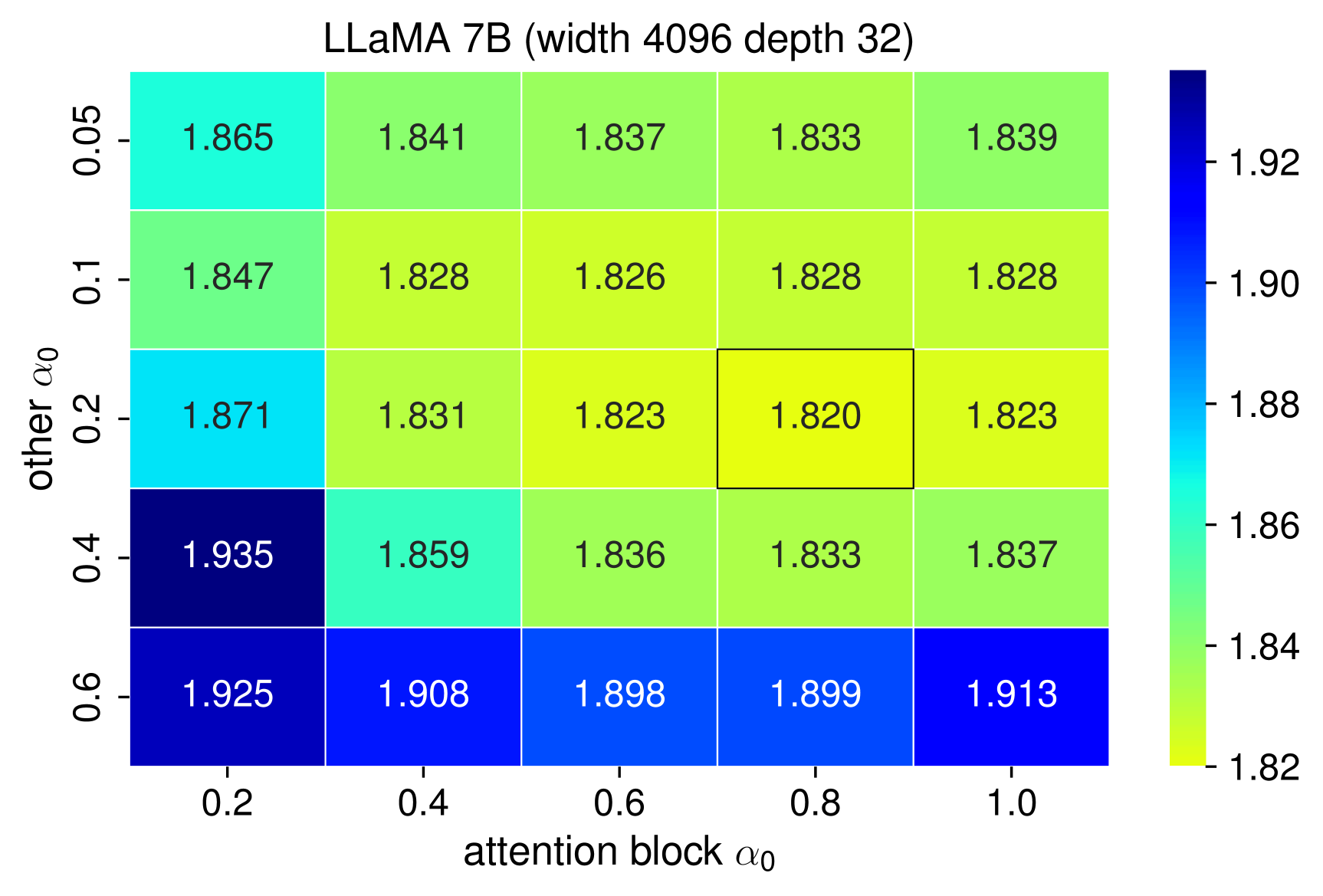

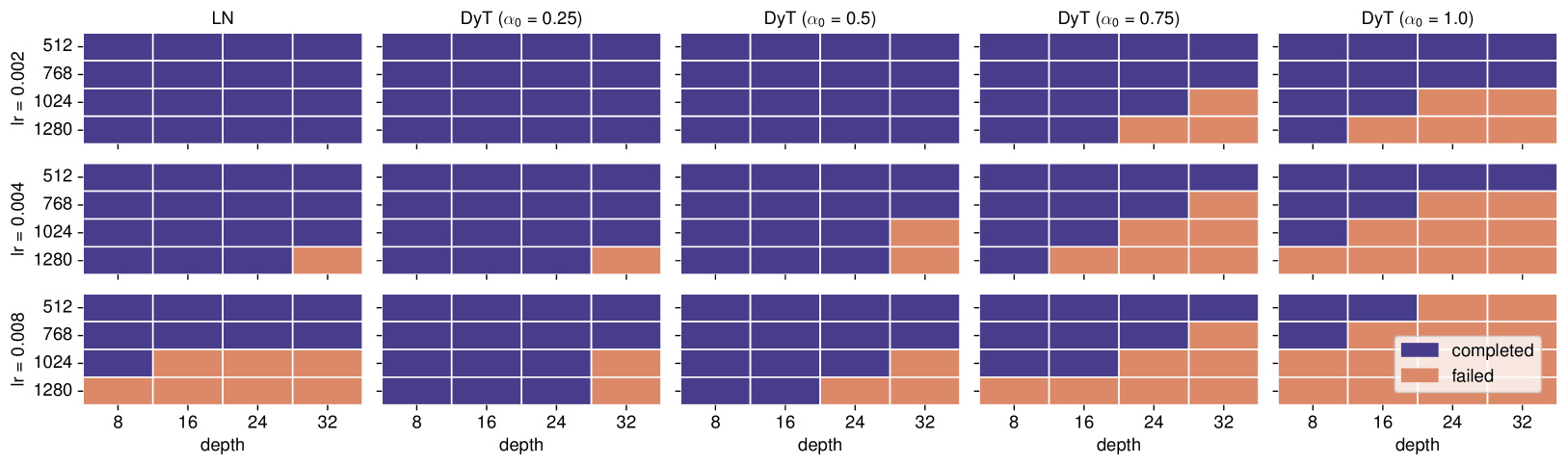

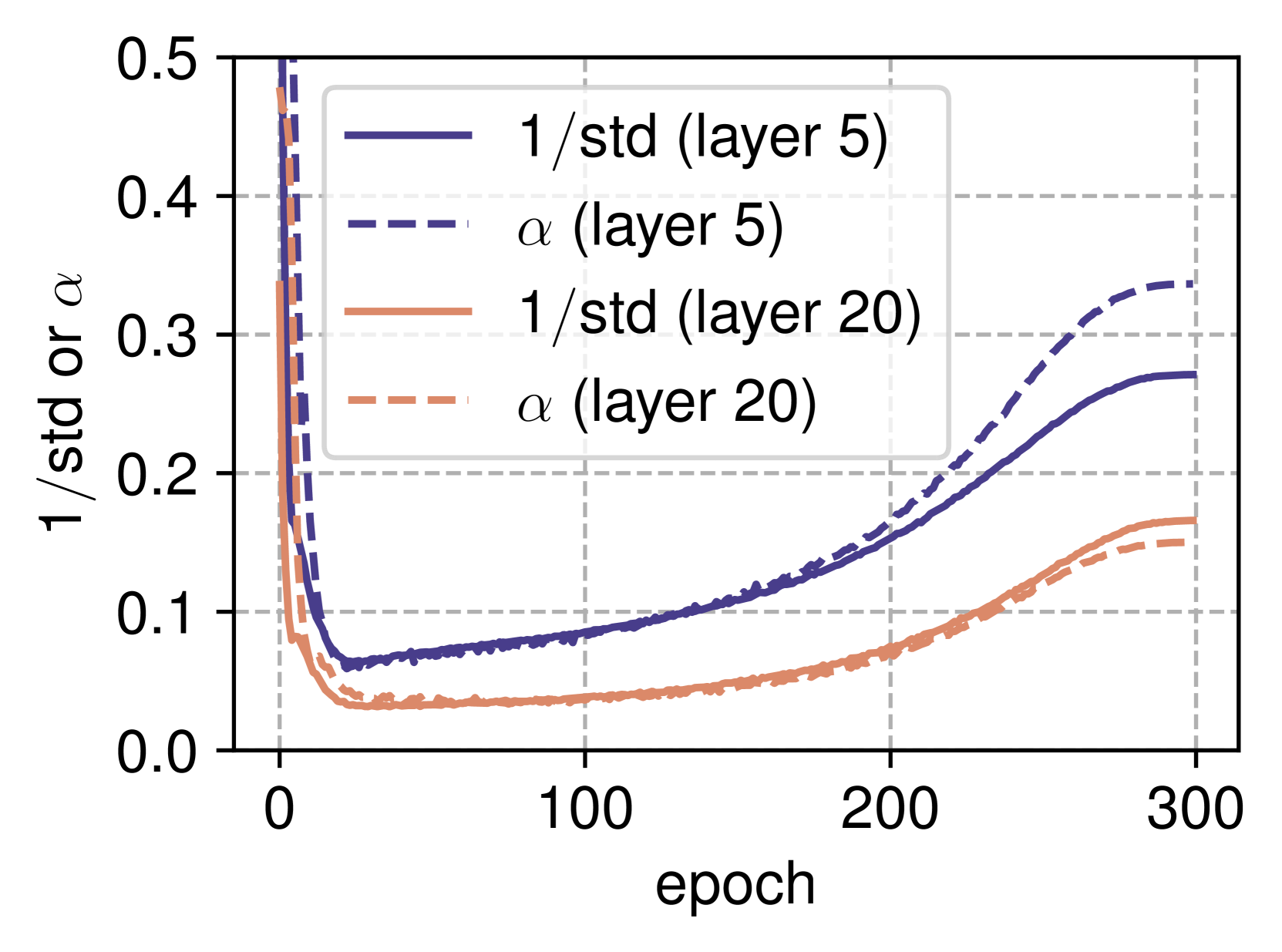

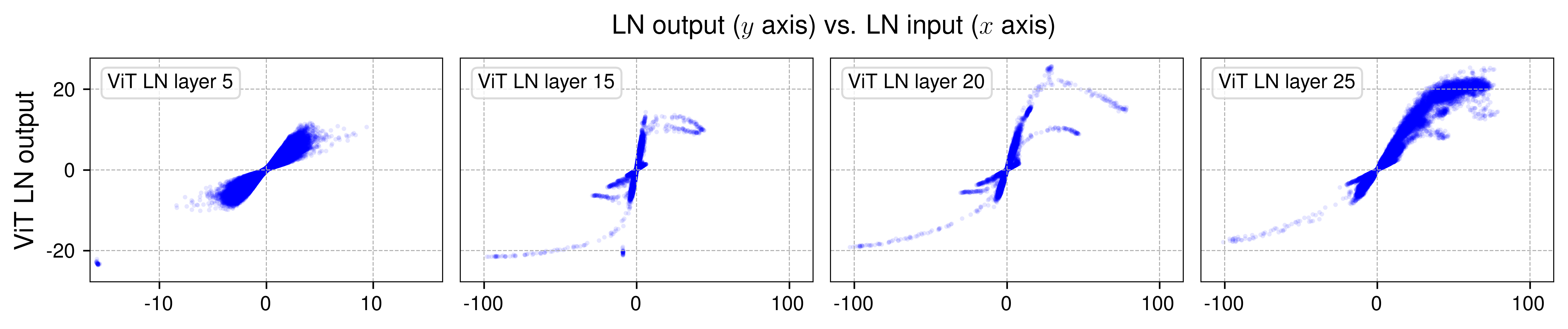

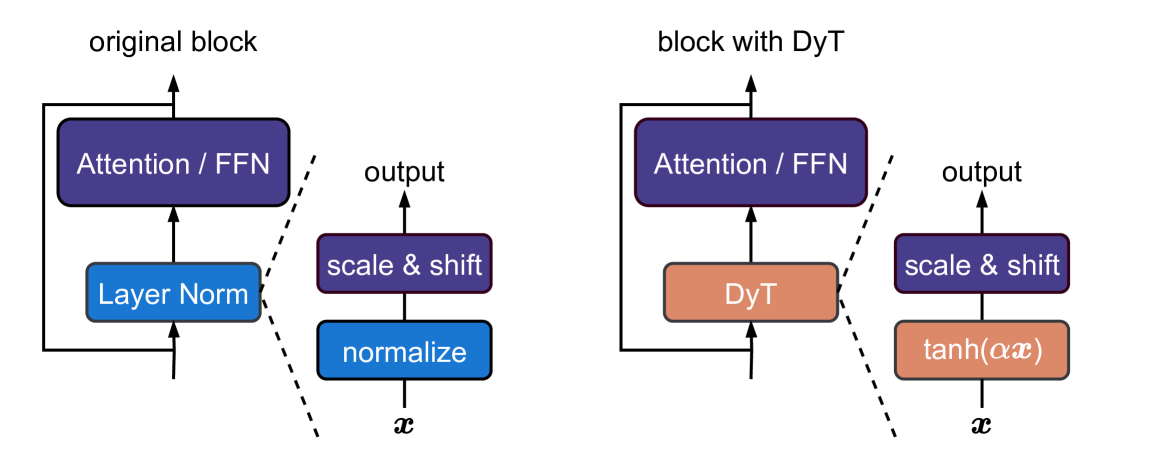

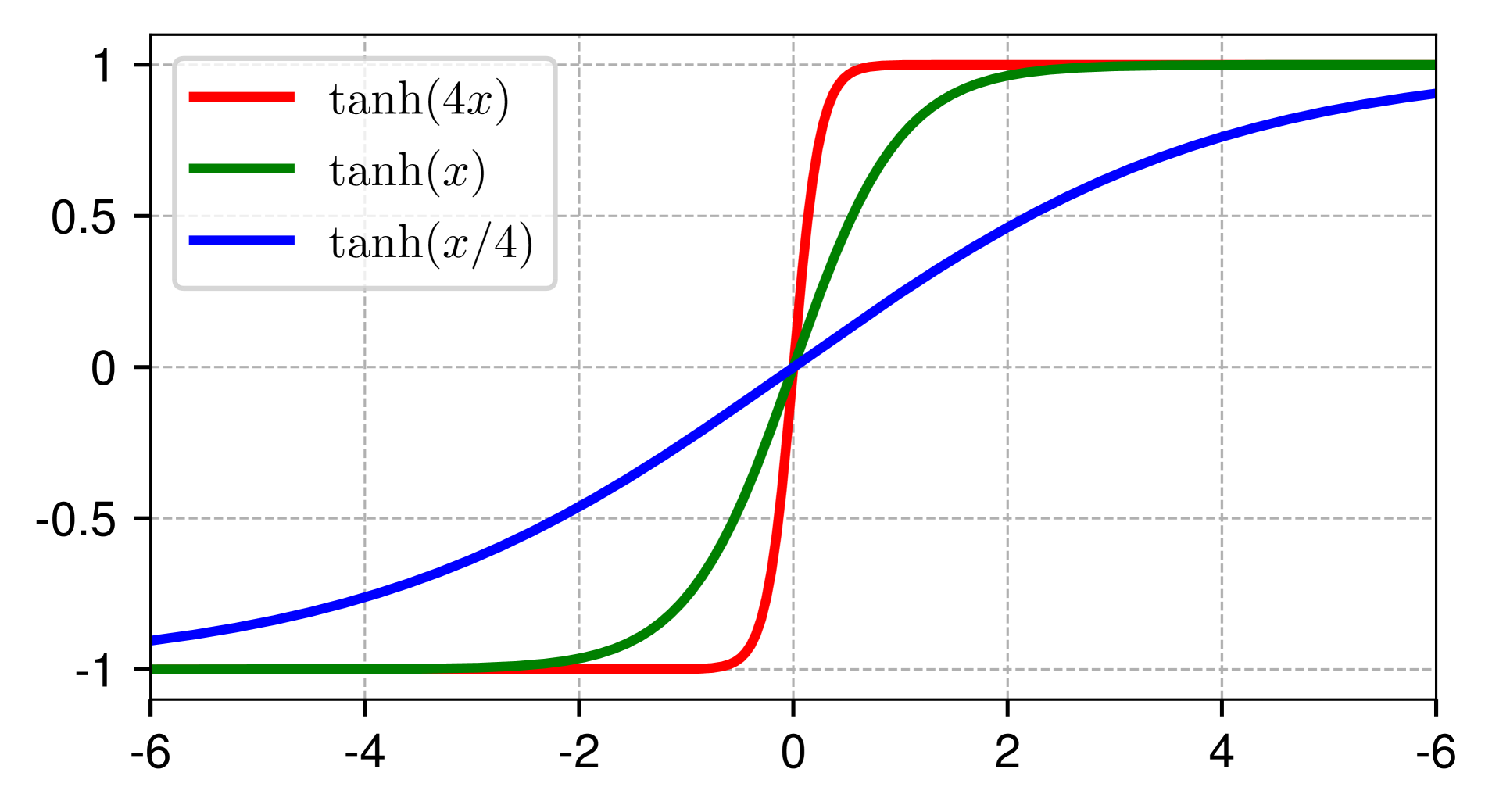

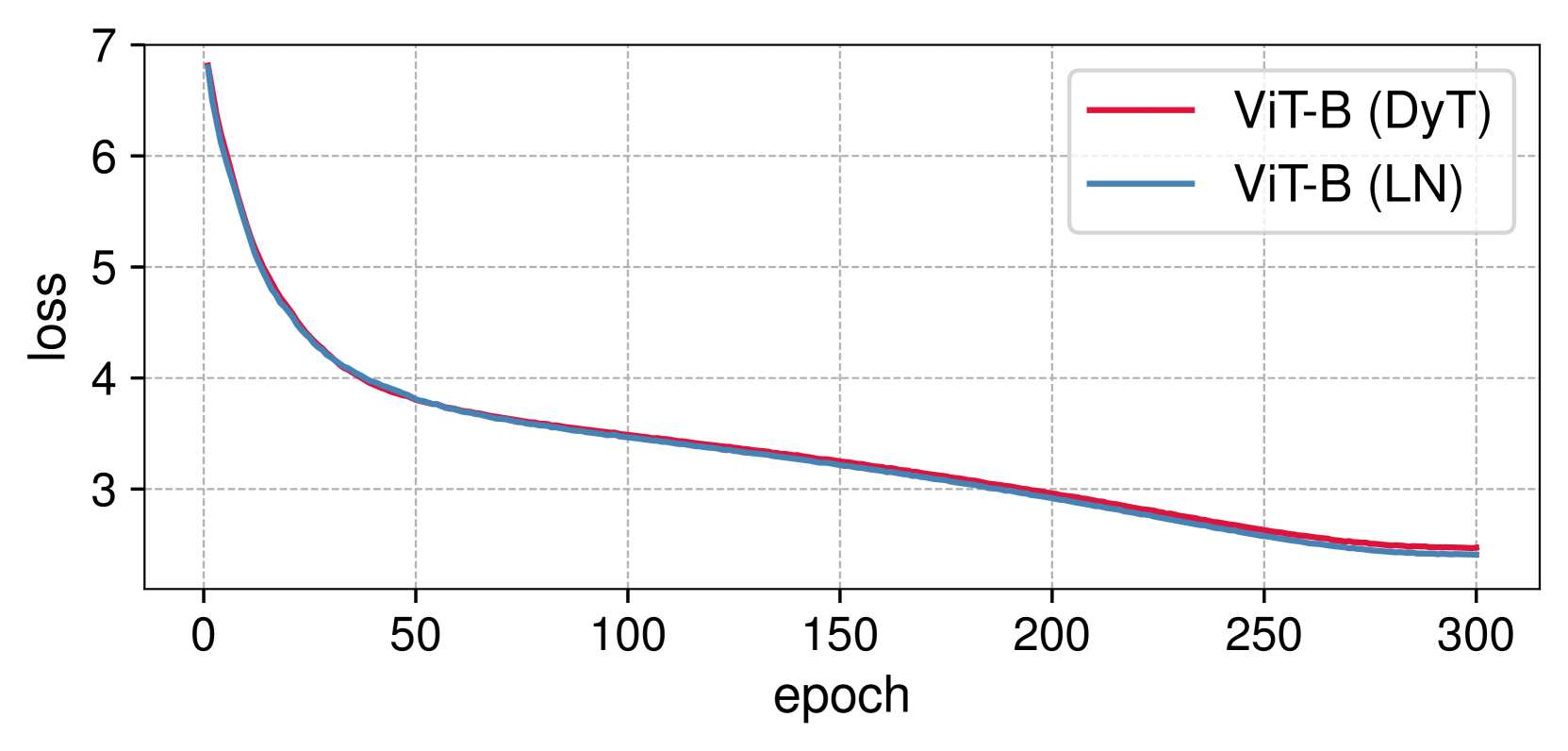

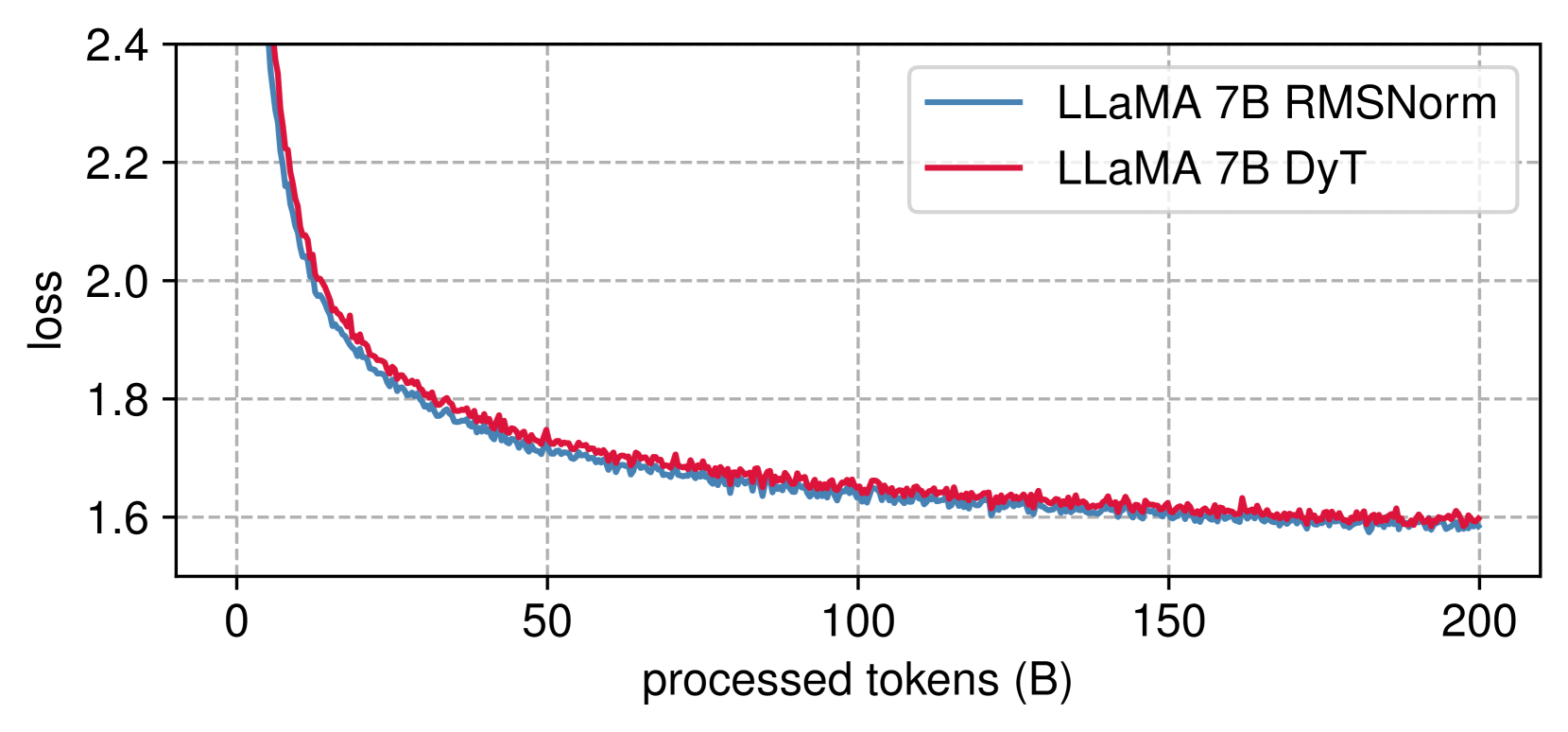

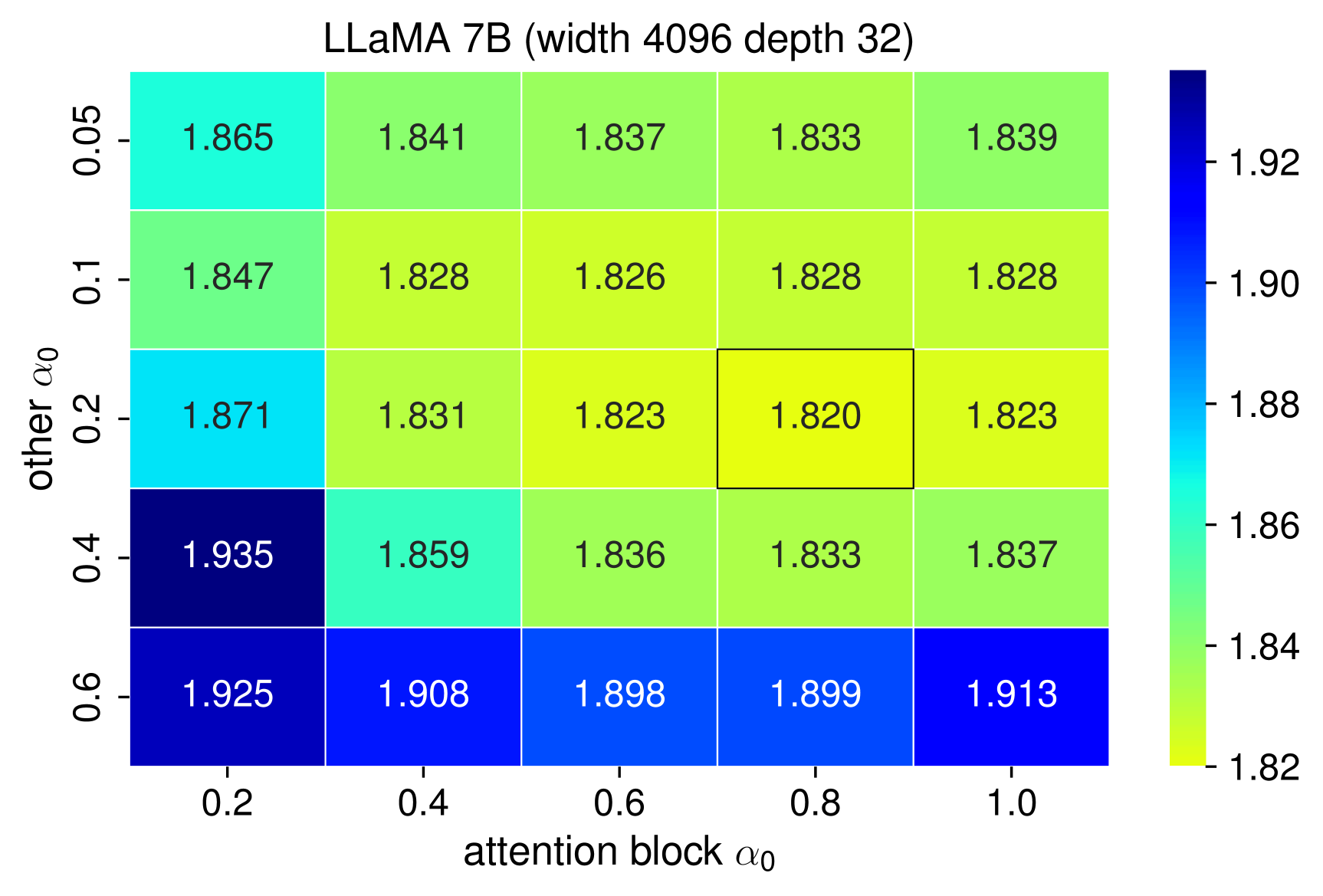

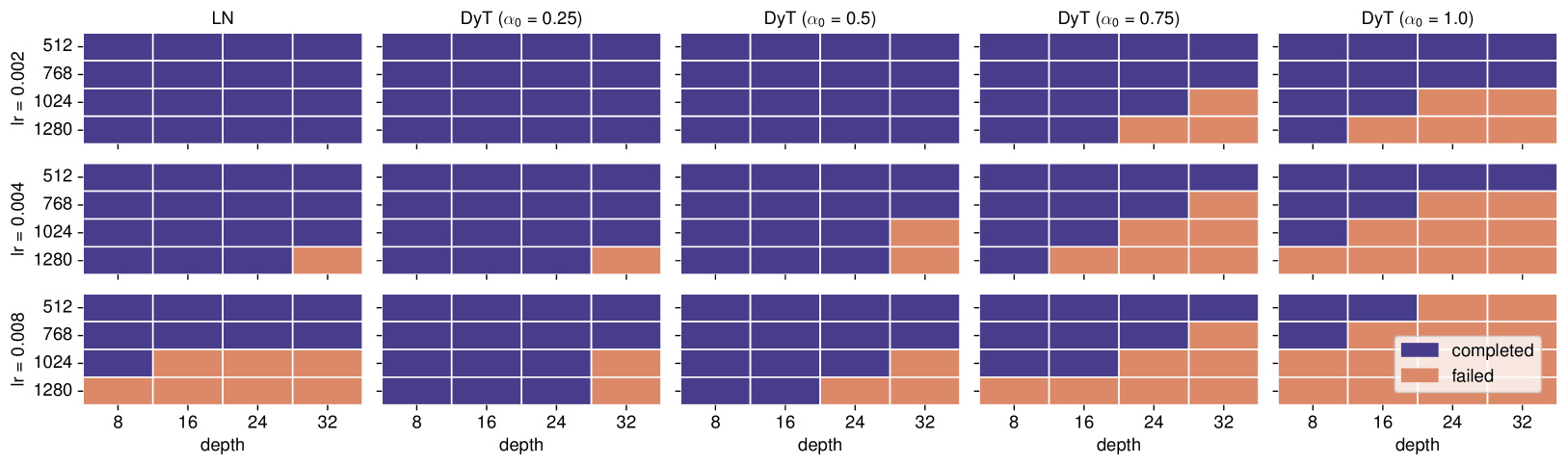

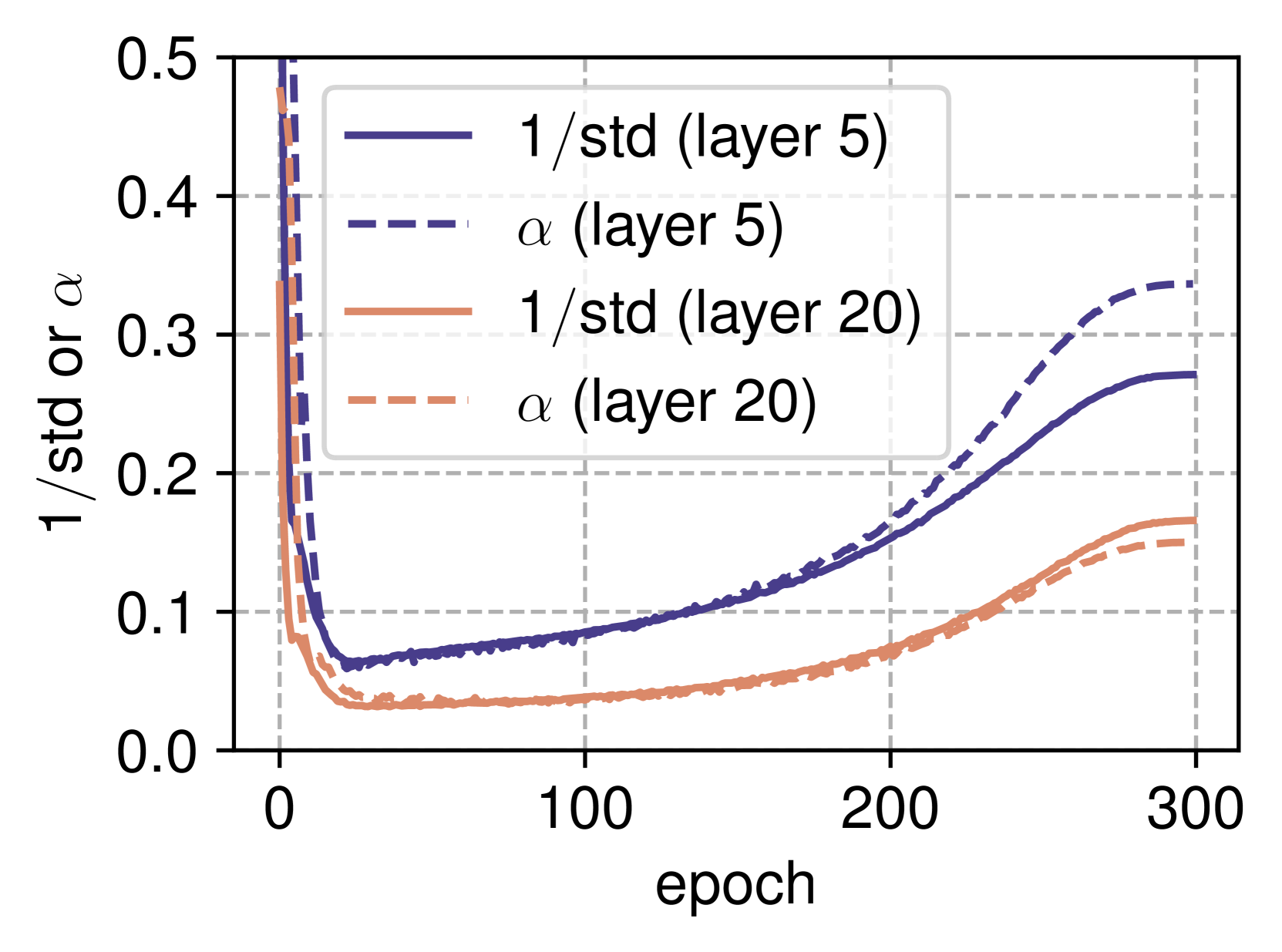

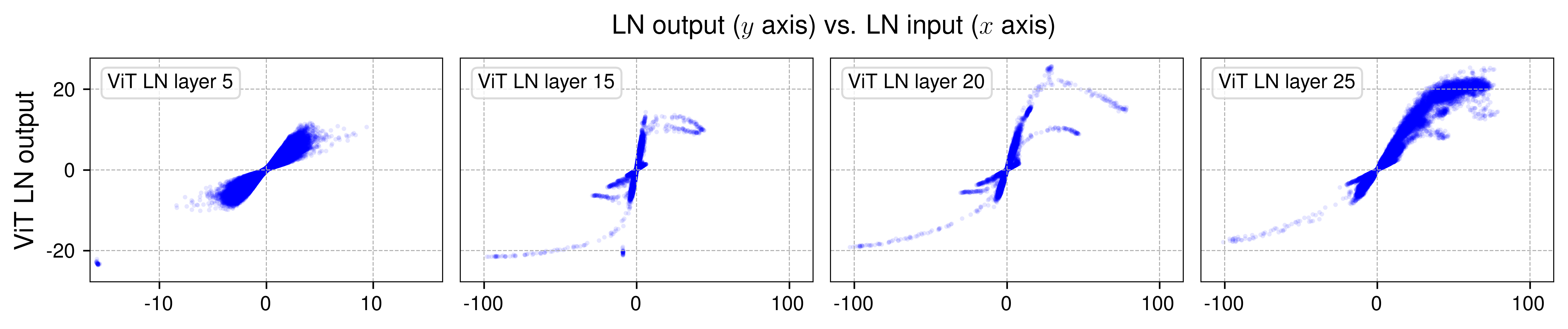

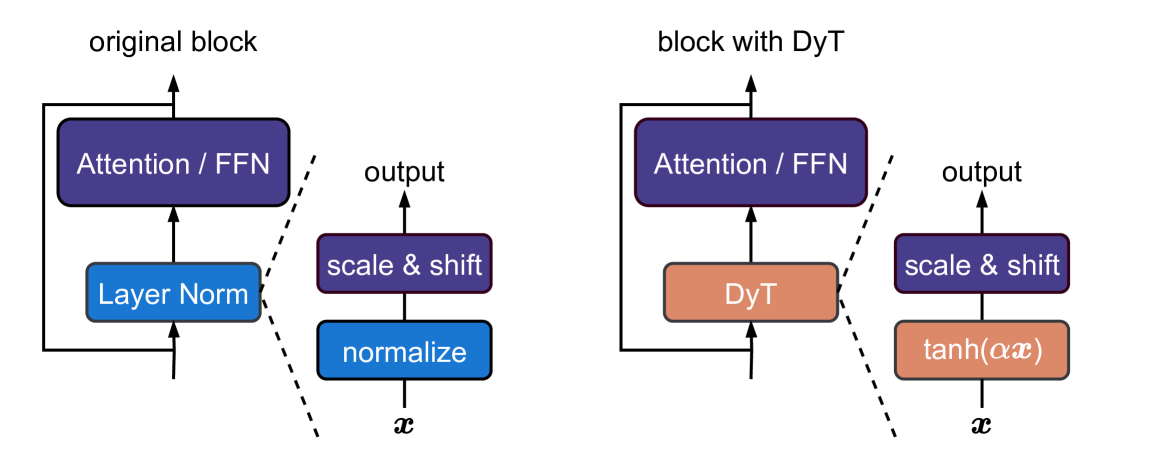

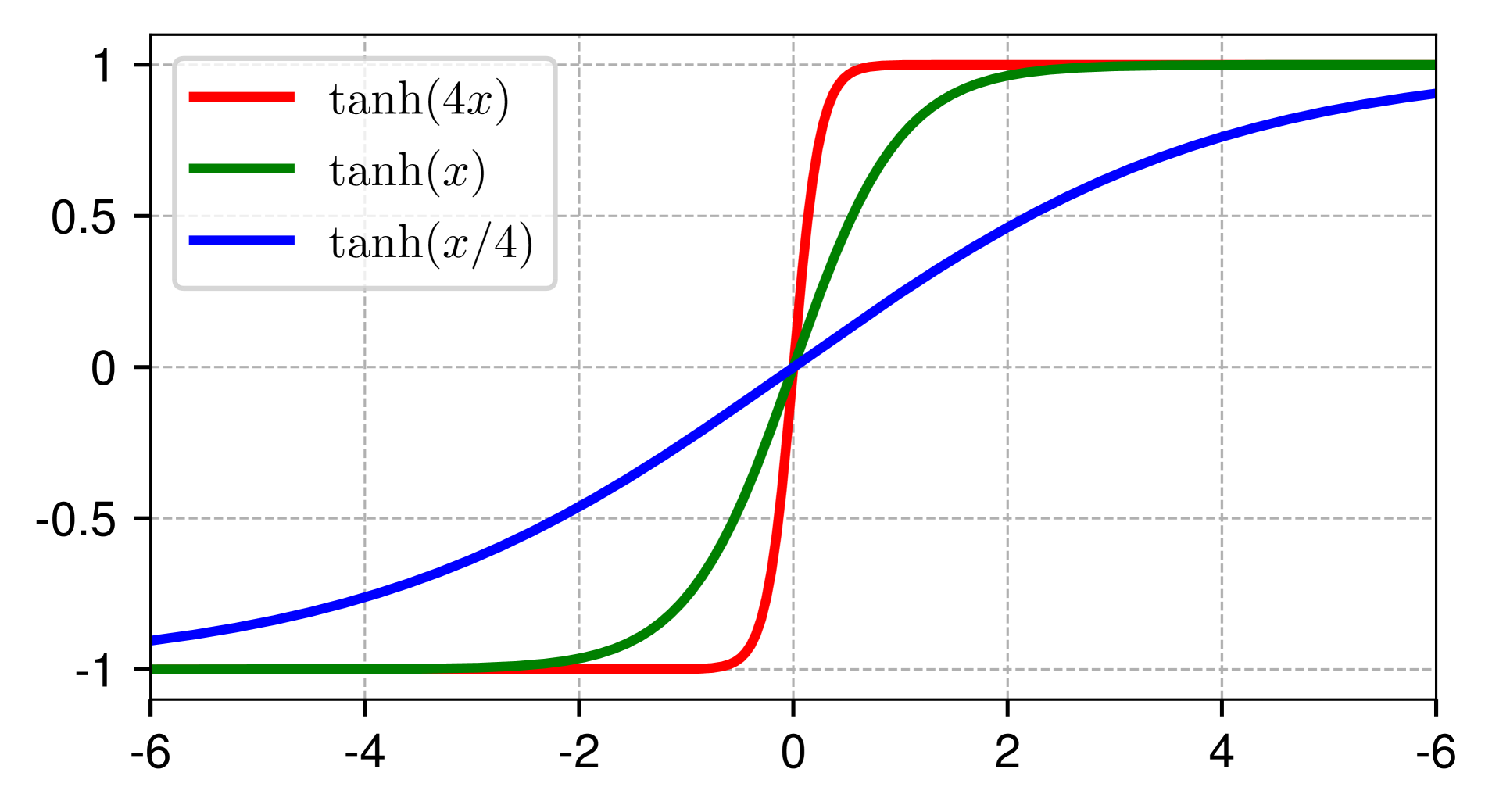

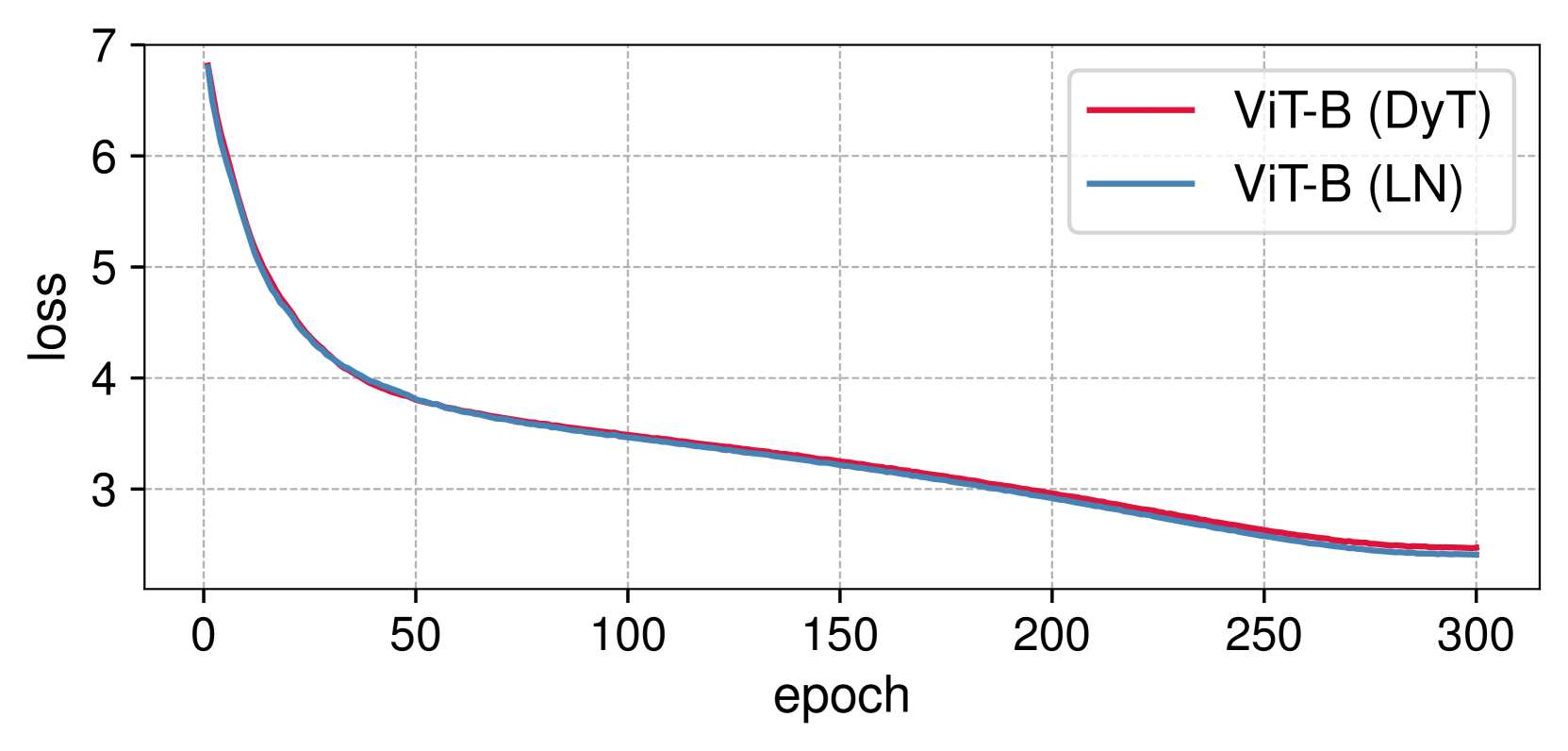

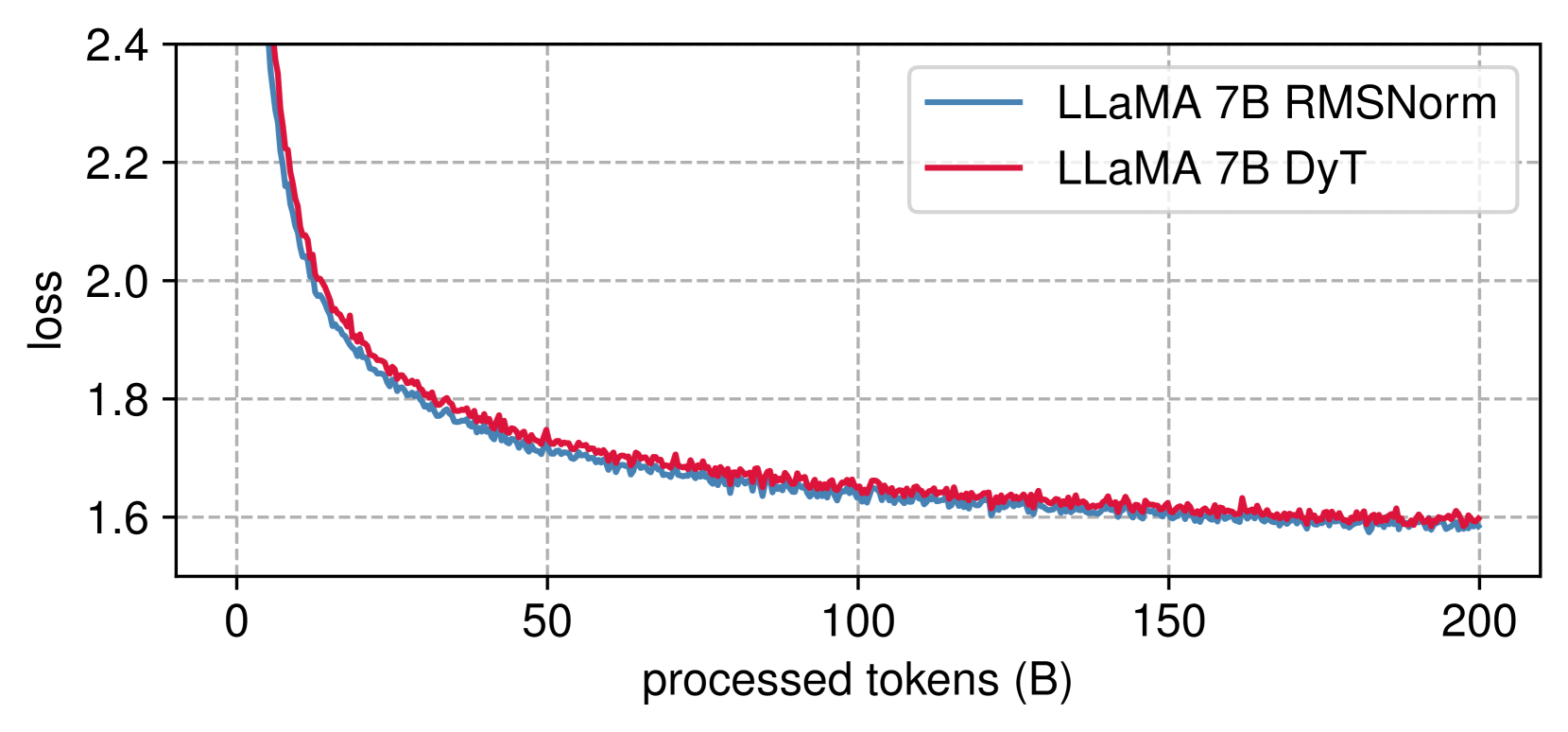

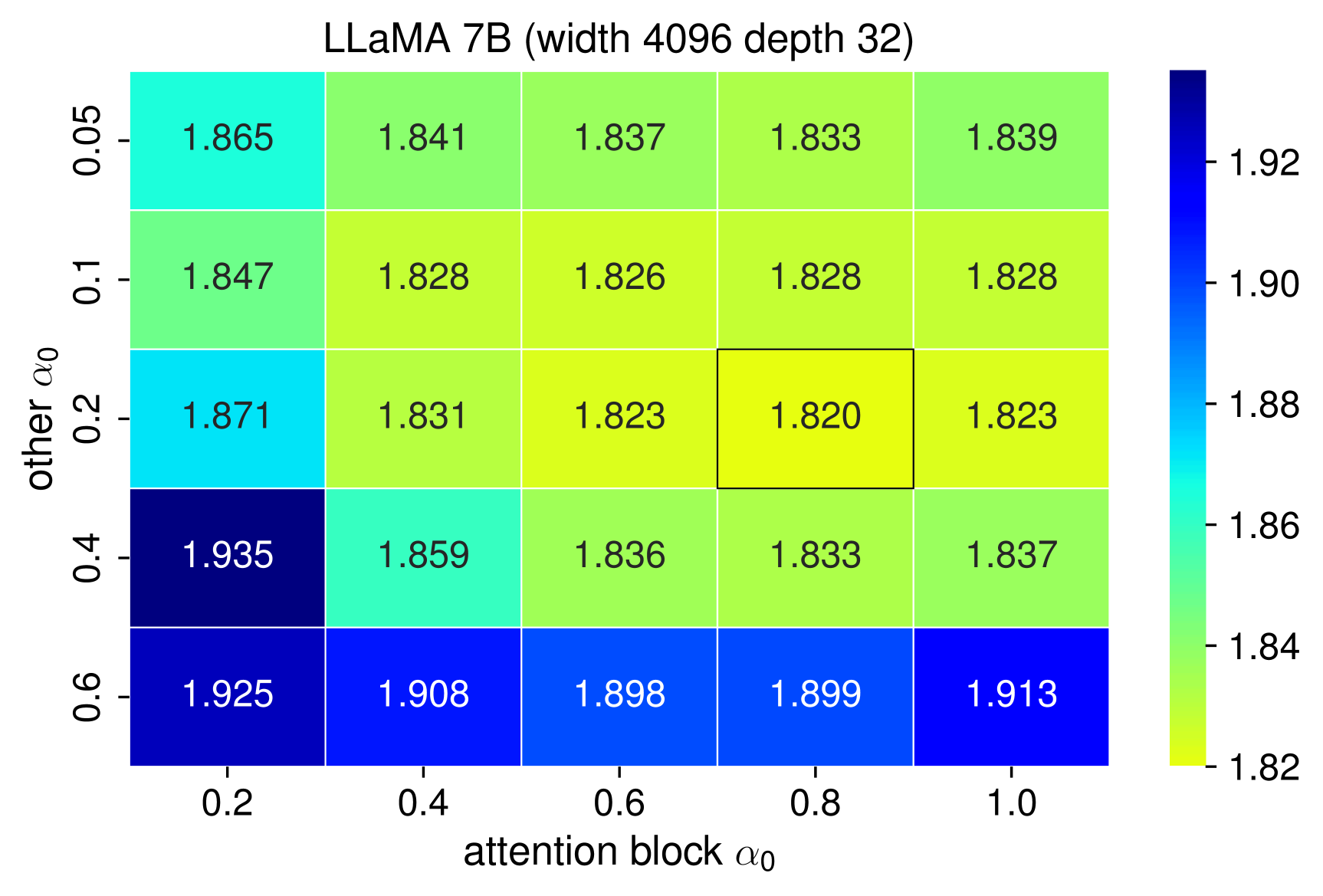

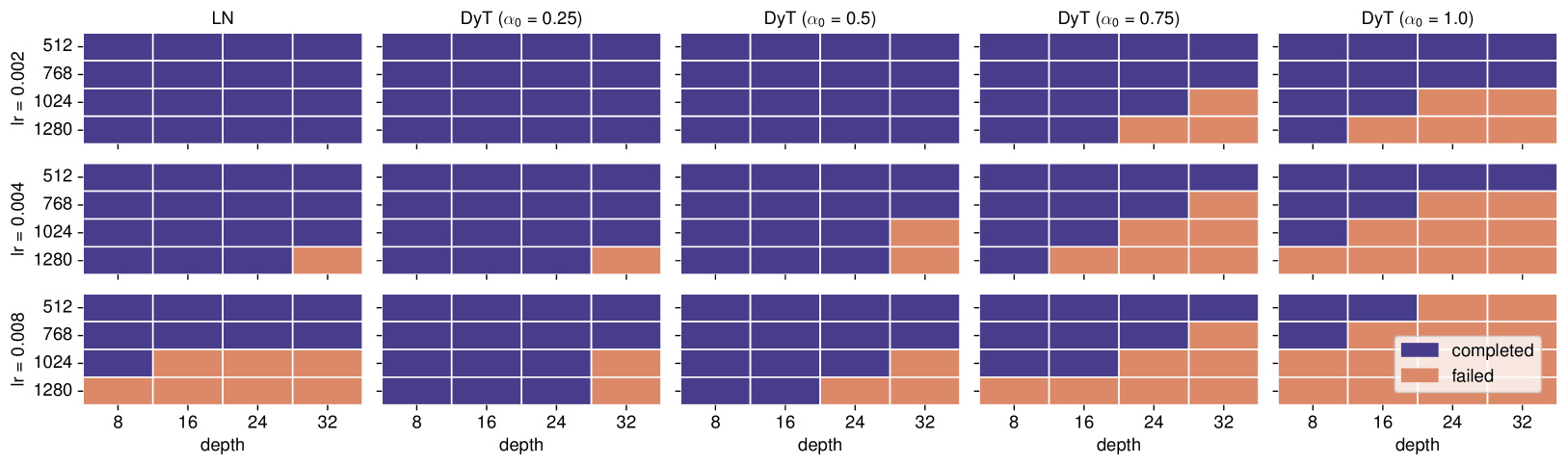

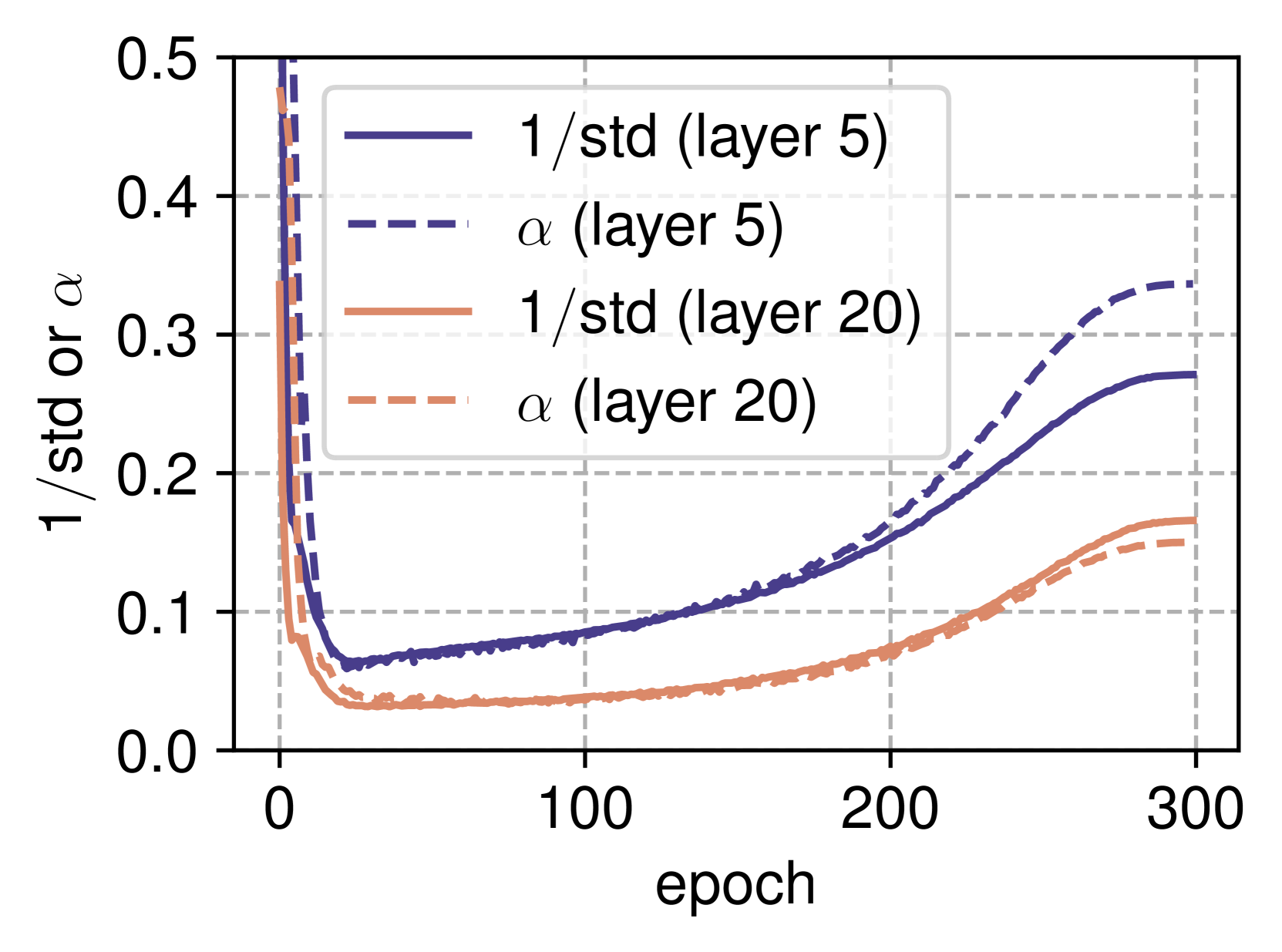

Transformers without Normalization | alphaXiv